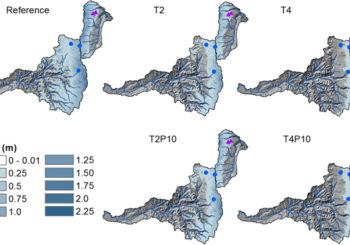

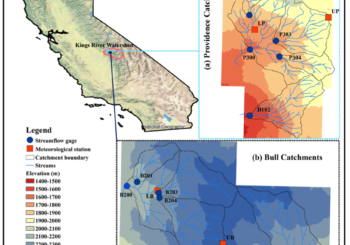

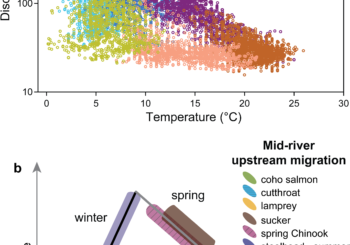

Record low snowpack conditions were observed at Snow Telemetry stations in the Cascade Mountains, USA, during the winters of 2014 and 2015. We tested the hypothesis that these winters are analogs for the temperature sensitivity of Cascades snowpacks. In the Oregon Cascades, the 2014 and 2015 winter air temperature anomalies were approximately 2 °C and 4 °C above the climatological mean, respectively. We used a spatially distributed snowpack energy balance model to simulate the sensitivity of multiple snowpack metrics to +2 °C and +4 °C of warming and compared our modeled sensitivities to the values observed during 2014 and 2015. We found that for each 1 °C of warming, modeled basin-mean peak snow water equivalent (SWE) declined by 22%–30%, the date of peak SWE (DPS) advanced by 13 days, the duration of snow cover (DSC) shortened by 31–34 days, and the snow disappearance date (SDD) advanced by 22–25 days. Our hypothesis was not borne out by the observations except in the case of peak SWE; the other snow metrics did not resemble the values predicted from modeled sensitivities, and thus these winters are not effective analogs of future temperature sensitivities for those metrics. It appears that the magnitude and phasing of winter precipitation events, such as large, late spring snowfall, rather than temperature alone, controlled the DPS, SDD, and DSC.
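To make the per-degree sensitivities concrete, the following minimal Python sketch scales the ranges quoted in the abstract to the +2 °C and +4 °C cases. It is an illustration only, not the energy balance model used in the study. The function names (`scale_linearly`, `expected_changes`) are hypothetical, and the sketch assumes the response is linear in temperature and that the peak SWE decline can be capped at 100%; neither assumption is stated in the abstract.

```python
# Per-degree sensitivities quoted in the abstract, as (low, high) ranges.
# Values are days of advance (DPS, SDD) or days of shortening (DSC) per 1 degC.
SENSITIVITY_DAYS_PER_DEGC = {
    "DPS": (13, 13),  # date of peak SWE
    "DSC": (31, 34),  # duration of snow cover
    "SDD": (22, 25),  # snow disappearance date
}

# Decline in basin-mean peak SWE, in percent per 1 degC of warming.
PEAK_SWE_DECLINE_PCT_PER_DEGC = (22, 30)


def scale_linearly(per_degree, warming_degc):
    """Scale a (low, high) per-degree range by a warming amount.

    ASSUMPTION: the response is linear in temperature. This is a
    simplification made for illustration only.
    """
    low, high = per_degree
    return (low * warming_degc, high * warming_degc)


def expected_changes(warming_degc):
    """Return the linearly scaled change in each metric for a given warming."""
    changes = {
        name: scale_linearly(per_degree, warming_degc)
        for name, per_degree in SENSITIVITY_DAYS_PER_DEGC.items()
    }
    swe_low, swe_high = scale_linearly(PEAK_SWE_DECLINE_PCT_PER_DEGC, warming_degc)
    # ASSUMPTION: a decline cannot exceed 100%, so cap the scaled values.
    changes["peak_SWE_pct"] = (min(swe_low, 100), min(swe_high, 100))
    return changes


if __name__ == "__main__":
    for warming in (2, 4):
        print(f"+{warming} degC:", expected_changes(warming))
```

Under these assumptions the scaled peak SWE decline reaches the 100% cap at +4 °C, which suggests that a simple linear extrapolation of the per-degree percentages breaks down at larger warming and should be read with caution.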

Link to the full-length paper:

http://iopscience.iop.org/article/10.1088/1748-9326/11/8/084009/meta